The Dawn of AI-Powered Music Creation

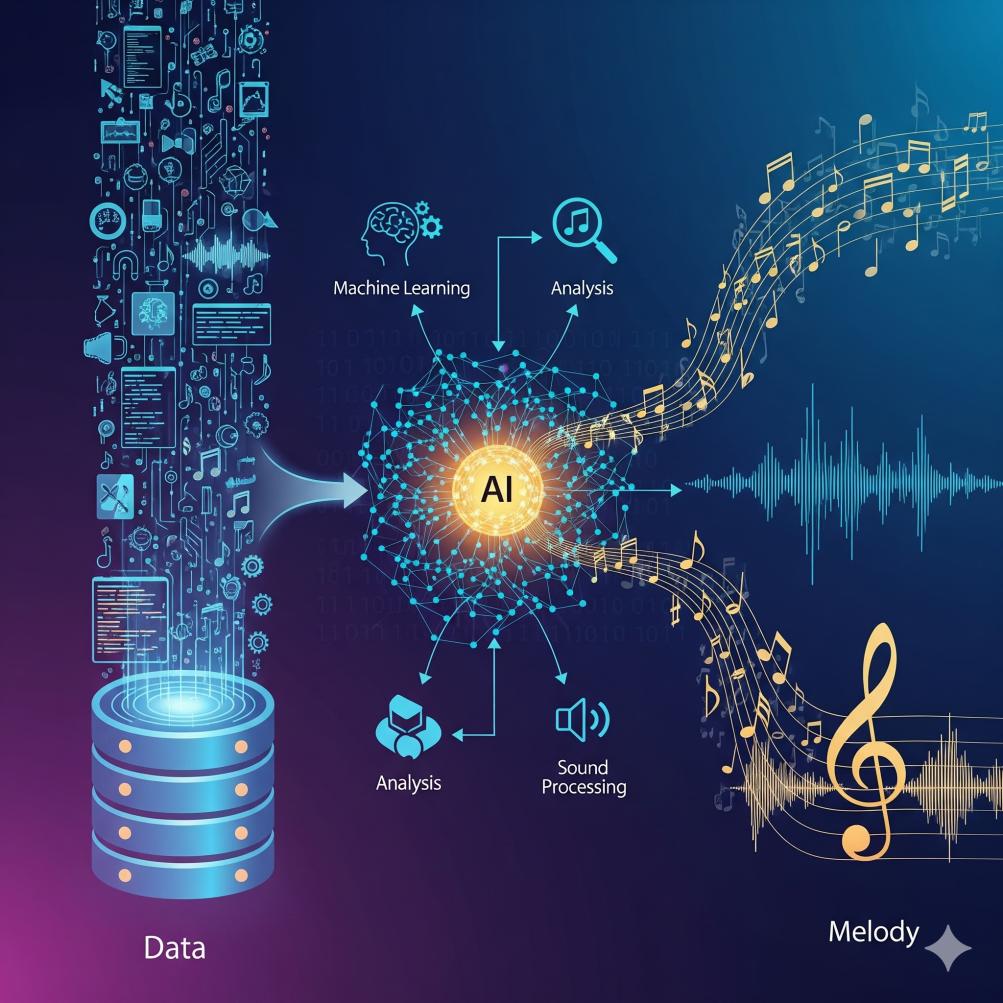

The year 2025 marks a pivotal moment in music history, as artificial intelligence moves from experimental tool to essential creative partner. Neural networks, particularly transformer architectures and diffusion models, are reshaping how we approach music composition and putting within reach capabilities that once belonged to science fiction.

Transformer Models in Music Generation

The transformer architecture, originally designed for natural language processing, has proven remarkably effective for music generation. These models excel at capturing long-range dependencies in musical sequences: the relationships between notes, rhythms, and harmonic progressions, often many bars apart, that define compelling compositions.
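To make the idea of long-range dependencies concrete, here is a minimal sketch of scaled dot-product self-attention, the core operation inside a transformer, applied to a toy melody. It is an illustration under simplifying assumptions: the `embed` helper and the pitch-class encoding are hypothetical, and a real model would add learned query/key/value projections, positional information, and multiple heads.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def embed(pitch_class, dim=12):
    """Hypothetical toy embedding: one scaled slot per pitch class."""
    vector = [0.0] * dim
    vector[pitch_class % 12] = 4.0
    return vector

def self_attention(embeddings):
    """Scaled dot-product self-attention with identity projections
    (no learned weights, which a trained transformer would have)."""
    dim = len(embeddings[0])
    outputs, weights = [], []
    for query in embeddings:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in embeddings
        ]
        row = softmax(scores)
        weights.append(row)
        outputs.append(
            [sum(w * value[i] for w, value in zip(row, embeddings)) for i in range(dim)]
        )
    return outputs, weights

# C E G F D A B C as pitch classes: the melody returns to its opening note.
melody = [0, 4, 7, 5, 2, 9, 11, 0]
outputs, weights = self_attention([embed(p) for p in melody])
last_note_weights = weights[-1]
```

In this toy setup the final C places as much weight on the opening C, seven steps earlier, as on itself, and far less on the notes in between. Distance in the sequence does not weaken the link, which is the property the paragraph above describes.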

Key advantages of transformer-based music AI:

- Superior pattern recognition across extended musical passages

- Ability to maintain musical coherence over long compositions

- Multi-instrument orchestration capabilities

- Style transfer between different musical genres

Diffusion Models: The New Frontier

Diffusion models represent the cutting edge of AI music generation. They work by gradually transforming noise into coherent musical structures over many small denoising steps, and conditioning those steps on a description of the desired result allows fine-grained control over tempo, mood, instrumentation, and genre.

Unlike symbolic approaches, which output note data such as MIDI and rely on a separate synthesis step, diffusion models can generate high-quality audio directly, preserving the nuanced characteristics of real instruments and vocals.
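The noise-to-signal process can be sketched in a few lines. The example below is a toy under loud assumptions: `oracle_noise_estimate` stands in for a trained neural network by peeking at the clean signal, which no real system can do, and the update is a deterministic, DDIM-style step chosen for brevity. All function names and the noise schedule values are hypothetical.

```python
import math
import random

def make_schedule(steps, beta_start=1e-4, beta_end=0.2):
    """Cumulative signal fraction alpha_bar[t] for t = 0..steps (alpha_bar[0] = 1)."""
    alpha_bar = [1.0]
    for t in range(1, steps + 1):
        beta = beta_start + (beta_end - beta_start) * (t - 1) / max(steps - 1, 1)
        alpha_bar.append(alpha_bar[-1] * (1.0 - beta))
    return alpha_bar

def oracle_noise_estimate(x_t, clean, a_bar):
    """ASSUMPTION: stand-in for a trained denoiser. It computes the noise
    exactly from the known clean signal; a real model must predict it."""
    return [
        (x - math.sqrt(a_bar) * c) / math.sqrt(1.0 - a_bar)
        for x, c in zip(x_t, clean)
    ]

def reverse_diffusion(clean, steps=50, seed=0):
    """Start from Gaussian noise and denoise step by step (DDIM-style update)."""
    rng = random.Random(seed)
    alpha_bar = make_schedule(steps)
    x = [rng.gauss(0.0, 1.0) for _ in clean]
    start = list(x)
    for t in range(steps, 0, -1):
        a_t, a_prev = alpha_bar[t], alpha_bar[t - 1]
        eps = oracle_noise_estimate(x, clean, a_t)
        # Estimate the clean signal, then re-noise it to the next, lower level.
        x0_hat = [(xi - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t) for xi, e in zip(x, eps)]
        x = [math.sqrt(a_prev) * x0 + math.sqrt(1.0 - a_prev) * e for x0, e in zip(x0_hat, eps)]
    return start, x

# A short 440 Hz tone sampled at 8 kHz plays the role of the "music" to recover.
sample_rate = 8000
clean = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(64)]
start, generated = reverse_diffusion(clean)
start_error = max(abs(s - c) for s, c in zip(start, clean))
final_error = max(abs(g - c) for g, c in zip(generated, clean))
```

Because the oracle is exact, the loop recovers the tone almost perfectly. The point is the shape of the loop, which operates on raw audio samples throughout. In a real system the oracle would be replaced by a network that also receives the conditioning inputs, such as tempo or genre.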

Real-World Applications

Film Scoring: AI composers can now create adaptive soundtracks that respond to scene dynamics, generating music that closely follows the visual narrative.

Game Audio: Procedural music generation allows for dynamic soundtracks that evolve based on player actions and game states, creating immersive audio experiences.
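As a rough sketch of how a soundtrack might follow game state, the example below maps a hypothetical `GameState` to tempo, mode, and layer volumes. It is a simple rule-based mapping, not a neural generator, and every field name and threshold is an assumption made for illustration. In an AI-driven system, values like these could serve as conditioning inputs to a generative model.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GameState:
    """Hypothetical signals a game engine might expose."""
    danger: float  # 0.0 (calm exploration) to 1.0 (full combat)
    health: float  # 0.0 (nearly defeated) to 1.0 (full health)

def clamp(value, low=0.0, high=1.0):
    return max(low, min(high, value))

def music_parameters(state):
    """Map game state to musical controls (illustrative thresholds only)."""
    danger = clamp(state.danger)
    health = clamp(state.health)
    return {
        # Tempo rises linearly from 80 to 140 BPM as danger increases.
        "tempo_bpm": round(80 + 60 * danger),
        # Switch to a darker mode once danger passes the midpoint.
        "mode": "minor" if danger > 0.5 else "major",
        # Layer volumes between 0.0 and 1.0.
        "layers": {
            "pads": 1.0 - 0.5 * danger,
            "percussion": danger,
            "low_health_pulse": 1.0 if health < 0.25 else 0.0,
        },
    }

calm = music_parameters(GameState(danger=0.0, health=1.0))
combat = music_parameters(GameState(danger=1.0, health=0.1))
```

Calling `music_parameters` once per game tick, or whenever the state changes, would let the music track the action. Smoothing the transitions between parameter sets is left out of this sketch.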