Beyond Audio: The Rise of Contextual AI Music

The next frontier in AI music generation isn't just better melodies or more realistic instruments; it's understanding context. Multimodal AI systems are changing music creation by combining visual information, text descriptions, and emotional cues, so that the generated music fits the setting it will be heard in and the purpose it serves.

Imagine uploading a photo of a sunset and receiving a piece of music that captures not just its visual beauty but the mood of that moment. Or describing a scene in words and having an AI compose a soundtrack that follows its narrative arc. This isn't science fiction: tools that do versions of both are already available.

Understanding Multimodal AI in Music

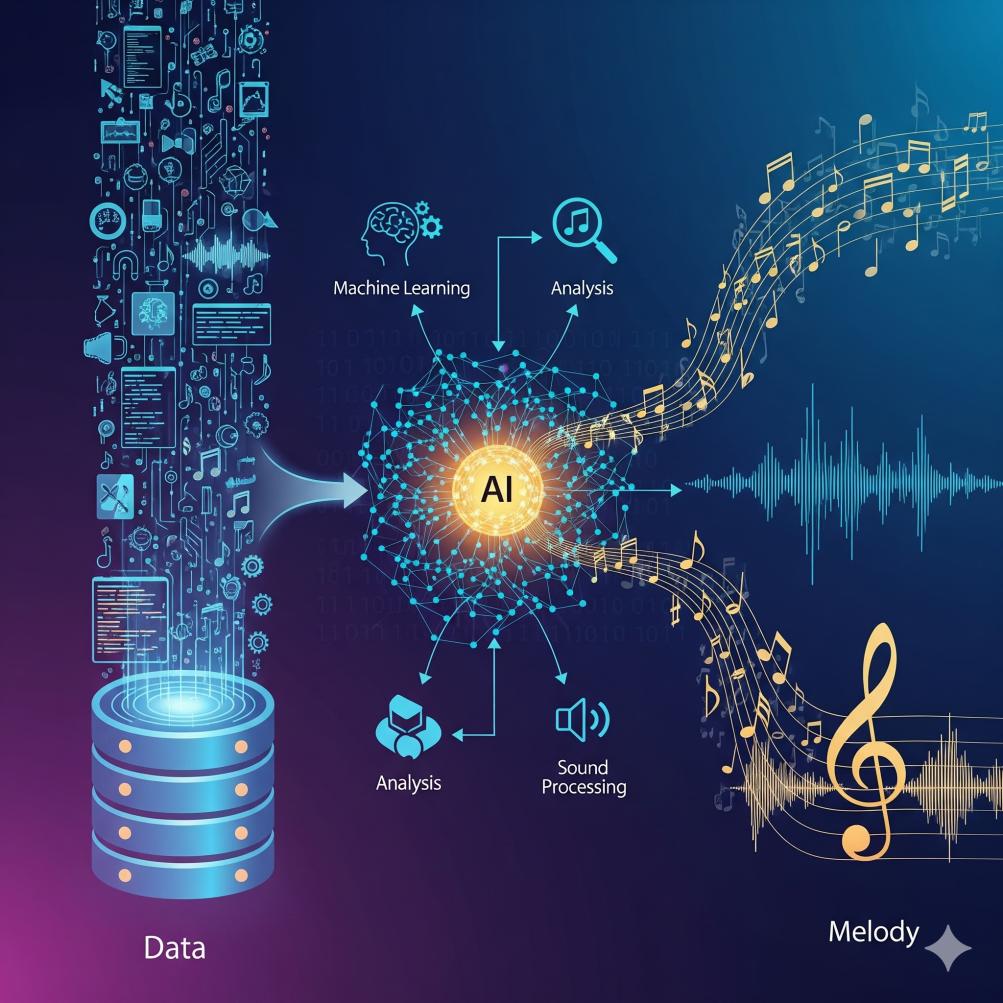

Multimodal AI systems process and integrate information from multiple input types simultaneously:

Input Modalities in Music AI:

- Visual: Images, videos, color palettes, art styles

- Textual: Descriptions, lyrics, stories, emotions

- Audio: Existing music, sound effects, ambient recordings

- Temporal: Time-based data, rhythm patterns, sequence information

- Contextual: Location, weather, social media data, biometric information
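One common way to integrate several inputs is late fusion: each modality is first encoded into a fixed-length vector by its own encoder, and the vectors are then combined into a single conditioning vector for the music generator. The sketch below assumes those encoders already exist and have produced equal-length vectors. The modality names and weights are hypothetical, and real systems often learn the combination (for example with cross-attention) rather than using a fixed weighted average.

```python
from typing import Dict, List


def fuse_embeddings(embeddings: Dict[str, List[float]],
                    weights: Dict[str, float]) -> List[float]:
    """Late fusion: weighted average of per-modality embedding vectors.

    Modalities that are absent from `embeddings` (for example, no image
    was supplied) are skipped, and the remaining weights are renormalized
    so they still sum to 1.
    """
    present = [m for m in embeddings if weights.get(m, 0.0) > 0]
    if not present:
        raise ValueError("no weighted modality present")
    dim = len(embeddings[present[0]])
    total = sum(weights[m] for m in present)
    fused = [0.0] * dim
    for m in present:
        vec = embeddings[m]
        if len(vec) != dim:
            raise ValueError(f"dimension mismatch for modality {m!r}")
        w = weights[m] / total  # renormalized weight
        for i, x in enumerate(vec):
            fused[i] += w * x
    return fused
```

For example, `fuse_embeddings({"visual": [1.0, 0.0], "text": [0.0, 1.0]}, {"visual": 1.0, "text": 3.0, "audio": 2.0})` ignores the missing audio input and returns `[0.25, 0.75]`.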

Output Capabilities:

- Adaptive soundtracks that change based on visual content

- Music that evolves with narrative progression

- Compositions tailored to specific emotional states

- Interactive audio experiences responsive to user behavior
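Tailoring music to an emotional state usually starts by placing that state on a valence (pleasant vs. unpleasant) and arousal (calm vs. excited) plane and mapping it to musical settings. The function below is a minimal sketch under a simplified heuristic: arousal drives tempo and loudness, valence picks major or minor. The numeric ranges and the `MusicParams` type are illustrative assumptions, not values from any particular product.

```python
from dataclasses import dataclass


@dataclass
class MusicParams:
    tempo_bpm: float
    mode: str       # "major" or "minor"
    velocity: int   # MIDI note velocity, 1-127


def params_for_emotion(valence: float, arousal: float) -> MusicParams:
    """Map a (valence, arousal) point, each in [-1, 1], to coarse musical settings."""
    v = max(-1.0, min(1.0, valence))
    a = max(-1.0, min(1.0, arousal))
    level = (a + 1) / 2                      # rescale arousal to [0, 1]
    tempo = 60 + level * (160 - 60)          # 60 BPM (calm) to 160 BPM (excited)
    velocity = round(40 + level * (110 - 40))
    mode = "major" if v >= 0 else "minor"
    return MusicParams(tempo_bpm=tempo, mode=mode, velocity=velocity)
```

A calm, sad input such as `params_for_emotion(-0.5, -1.0)` yields a slow (60 BPM), quiet piece in a minor mode.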

Vision-to-Music: Translating Images into Sound

One of the most exciting developments is AI's ability to "see" music in images and translate visual elements into sonic landscapes.

Technical Approaches:

- Color-to-Tone Mapping: Converting color palettes to harmonic structures

- Composition Analysis: Translating visual composition rules to musical arrangement

- Emotional Recognition: Identifying mood in images and matching musical emotions

- Movement Detection: Converting visual motion to rhythmic patterns
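Color-to-tone mapping can be as simple as treating the color wheel like the twelve pitch classes. The sketch below assumes the image's dominant colors have already been extracted as RGB triples. It uses hue to choose a chord root and brightness to choose a major (bright) or minor (dark) triad. This mapping is arbitrary and purely illustrative; there is no standard correspondence between colors and pitches.

```python
import colorsys
from typing import List, Tuple

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def color_to_chord(rgb: Tuple[int, int, int]) -> List[str]:
    """Map one RGB color (0-255 per channel) to a triad of note names."""
    r, g, b = (c / 255 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)   # each component in [0, 1]
    root = round(h * 12) % 12                # 12 hue slices, one per pitch class
    third = 4 if v >= 0.5 else 3             # bright: major third; dark: minor third
    return [NOTE_NAMES[(root + i) % 12] for i in (0, third, 7)]


def palette_to_progression(palette: List[Tuple[int, int, int]]) -> List[List[str]]:
    """Turn an image's dominant colors, in order, into a chord progression."""
    return [color_to_chord(c) for c in palette]
```

With this mapping, pure red becomes a C major triad and a dark green becomes E minor, so a red-then-green palette would produce a C major to E minor progression.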

Real-World Applications:

Film Scoring:

- Automatic generation of temp scores from rough cuts

- Real-time music adaptation based on scene analysis

- Consistent musical themes across visual motifs

- Cost-effective scoring for independent filmmakers
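A basic ingredient of scene-aware scoring is finding where shots change, since cuts are natural points to switch or re-time music. The sketch below uses frame differencing, a simple and well-known baseline for shot-boundary detection. It assumes frames arrive as flat, non-empty lists of grayscale pixel values. The `threshold` default is an arbitrary assumption. Production tools typically compare color histograms or use learned models instead.

```python
from typing import List, Sequence


def detect_cuts(frames: Sequence[Sequence[float]], fps: float,
                threshold: float = 30.0) -> List[float]:
    """Return timestamps (seconds) where a hard cut likely occurs.

    A cut is flagged when the mean absolute pixel difference between
    consecutive frames exceeds `threshold` (pixel values 0-255).
    """
    cuts = []
    for i in range(1, len(frames)):
        prev, cur = frames[i - 1], frames[i]
        diff = sum(abs(a - b) for a, b in zip(prev, cur)) / len(cur)
        if diff > threshold:
            cuts.append(i / fps)  # time of the first frame of the new shot
    return cuts
```

The returned timestamps can then serve as candidate cue points where a temp score changes section.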

Social Media Content:

- Instagram posts generating matching background music

- TikTok videos with AI-composed soundtracks

- YouTube thumbnails influencing intro music

- Automatic playlist generation from photo albums

Art Installations:

- Museum exhibits with responsive soundscapes

- Gallery openings with music generated from displayed artworks

- Interactive installations that sonify visitor movements
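Sonifying visitor movement usually means turning a motion measurement into rhythmic density. The sketch below assumes an upstream tracker (not shown) reports a motion level normalized to [0, 1]. It converts that level to a number of onsets per bar and spreads them evenly with the "Euclidean rhythm" technique, which distributes k pulses over n steps as evenly as possible.

```python
from typing import List


def euclidean_pattern(pulses: int, steps: int) -> List[bool]:
    """Spread `pulses` onsets as evenly as possible over `steps` (Euclidean rhythm)."""
    return [(i * pulses) % steps < pulses for i in range(steps)]


def motion_to_rhythm(motion: float, steps: int = 16) -> List[bool]:
    """Map a normalized motion level (0.0 = still, 1.0 = maximal) to one bar of onsets.

    More movement in the room produces more onsets per bar.
    """
    level = max(0.0, min(1.0, motion))
    pulses = round(level * steps)
    return euclidean_pattern(pulses, steps)
```

For instance, `euclidean_pattern(3, 8)` gives the familiar x..x..x. pattern, and a motion level of 0.25 yields four evenly spaced hits in a 16-step bar.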

Text-to-Music: From Words to Melodies

Natural language processing in music AI has evolved from simple keyword matching to sophisticated narrative understanding.

Advanced Capabilities:

Semantic Understanding:

- Analyzing emotional arc of written stories

- Identifying character themes and musical motifs

- Understanding tension and resolution in narratives

- Mapping dialogue to musical conversation patterns
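One way to analyze an emotional arc is to score each sentence's valence and smooth the scores over time, giving a curve that can drive mode, tension, or intensity. The sketch below swaps in a tiny hand-written lexicon as a stand-in. `LEXICON` is hypothetical, and a real system would use a trained sentiment or emotion model rather than word lookup.

```python
import re
from typing import Dict, List

# Hypothetical toy lexicon; real systems use trained sentiment models.
LEXICON: Dict[str, float] = {
    "happy": 1.0, "joy": 1.0, "love": 1.0, "calm": 0.5,
    "sad": -1.0, "fear": -1.0, "dark": -0.5, "death": -1.0,
}


def sentence_valence(sentence: str) -> float:
    """Average lexicon score of the sentence's known words (0.0 if none)."""
    words = re.findall(r"[a-z']+", sentence.lower())
    scores = [LEXICON[w] for w in words if w in LEXICON]
    return sum(scores) / len(scores) if scores else 0.0


def emotional_arc(text: str, window: int = 3) -> List[float]:
    """Per-sentence valence, smoothed with a trailing moving average."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    raw = [sentence_valence(s) for s in sentences]
    arc = []
    for i in range(len(raw)):
        chunk = raw[max(0, i - window + 1): i + 1]
        arc.append(sum(chunk) / len(chunk))
    return arc
```

A falling curve could, for example, signal the generator to move from major to minor or to thin out the arrangement.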

Genre and Style Recognition:

- "Epic fantasy novel" → Orchestral compositions with medieval influences

- "Cyberpunk thriller" → Electronic music with industrial elements

- "Romantic comedy" → Light, playful melodies with jazz influences

- "Horror story" → Dissonant harmonies and tension-building techniques
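The genre examples above can be reproduced with the keyword-matching baseline that this section contrasts with modern systems. The sketch is only that baseline: the preset names, instrumentation strings, and tempos are hypothetical. A system with real semantic understanding would compare learned text embeddings, so that a prompt like "dragons and ancient kingdoms" maps to the fantasy style without containing the word "fantasy".

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StylePreset:
    instrumentation: str
    harmony: str
    tempo_bpm: int


# Hypothetical presets mirroring the genre examples in the text.
PRESETS = {
    "epic fantasy": StylePreset("orchestra with medieval influences", "modal", 90),
    "cyberpunk": StylePreset("synths and industrial percussion", "minor", 128),
    "romantic comedy": StylePreset("light jazz combo", "major sevenths", 110),
    "horror": StylePreset("strings with tone clusters", "dissonant", 70),
}


def match_preset(prompt: str) -> Optional[StylePreset]:
    """Return the first preset whose key phrase appears in the prompt, else None."""
    text = prompt.lower()
    for phrase, preset in PRESETS.items():
        if phrase in text:
            return preset
    return None
```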

Dynamic Adaptation:

- Music that evolves in real time as text is typed

- Soundtracks that adjust based on reading speed

- Compositions that reflect the complexity of the language used

- Musical punctuation that mirrors textual emphasis
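Dynamic adaptation of this kind can be sketched as two small mappings: typing or reading speed to tempo, and punctuation marks to musical gestures. Both functions below are illustrative assumptions. The 20-80 words-per-minute range, the tempo bounds, and the choice of gesture for each mark are arbitrary.

```python
from typing import List, Tuple


def tempo_from_wpm(wpm: float, lo: float = 60.0, hi: float = 140.0) -> float:
    """Linearly map typing/reading speed (assumed 20-80 WPM) onto a tempo range."""
    t = (wpm - 20.0) / (80.0 - 20.0)
    t = max(0.0, min(1.0, t))   # clamp speeds outside the assumed range
    return lo + t * (hi - lo)


def punctuation_accents(text: str) -> List[Tuple[int, str]]:
    """Map punctuation marks to (character index, musical gesture) pairs."""
    gestures = {"!": "accent", "?": "unresolved chord", ".": "cadence", ",": "short rest"}
    return [(i, gestures[ch]) for i, ch in enumerate(text) if ch in gestures]
```

For example, a reader moving at 50 WPM would get a 100 BPM backing track, and "Wait, what?!" would produce a short rest followed by an unresolved chord and an accent.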

Emotional Intelligence in AI Music

The most sophisticated multimodal systems add emotion recognition, so the music can follow how a listener feels, not only what they see or read.

Emotion Detection Methods:

Facial Recognition:

- Real-time analysis of user expressions

- Music adaptation based on detected mood changes

- Personalized emotional response profiles

- Crowd emotion analysis for public installations
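Per-frame expression classifiers are noisy, and feeding their raw output straight into the music would cause jarring changes. A common remedy is debouncing: the active mood changes only after a new label persists. The sketch below assumes an upstream face-expression model (not shown) emits one mood label per frame. The `hold` length is an arbitrary assumption.

```python
from typing import Iterable, List, Optional


def stable_moods(detections: Iterable[str], hold: int = 3) -> List[str]:
    """Debounce per-frame mood labels before they drive the music.

    The active mood switches only after a different label has been
    detected `hold` frames in a row, so one misclassified frame does
    not trigger an abrupt musical change.
    """
    active: Optional[str] = None
    candidate: Optional[str] = None
    streak = 0
    out: List[str] = []
    for label in detections:
        if active is None:
            active = label                      # first frame sets the mood
        elif label == active:
            candidate, streak = None, 0         # interruption resets the streak
        else:
            if label == candidate:
                streak += 1
            else:
                candidate, streak = label, 1
            if streak >= hold:
                active, candidate, streak = label, None, 0
        out.append(active)
    return out
```

Here a single "happy" frame inside a run of "calm" frames is ignored, while three consecutive "happy" frames switch the music.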

Text Sentiment Analysis:

- Deep analysis of written content for emotional undertones

- Recognition of sarcasm, irony, and complex emotions

- Cultural context understanding for appropriate musical response

- Temporal emotion tracking across long texts

Biometric Integration:

- Heart rate monitoring for stress/relaxation states

- Sleep pattern analysis for bedtime music generation

- Activity level tracking for workout playlists

- Environmental sensor integration (light, temperature, humidity)
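Biometric integration can start from a simple rule that relates heart rate to musical tempo. The sketch below uses a heuristic assumption rather than an established protocol. For relaxation it sets the tempo slightly below the current heart rate. For workouts it sets the tempo slightly above. The 10% offsets and the 50-180 BPM clamp are illustrative choices.

```python
def tempo_for_heart_rate(heart_bpm: float, goal: str) -> float:
    """Pick a music tempo (BPM) from the listener's heart rate.

    goal="relax":   tempo about 10% below the current heart rate.
    goal="workout": tempo about 10% above it.
    The result is clamped to a playable 50-180 BPM range.
    """
    if goal == "relax":
        tempo = heart_bpm * 0.9
    elif goal == "workout":
        tempo = heart_bpm * 1.1
    else:
        raise ValueError(f"unknown goal: {goal!r}")
    return max(50.0, min(180.0, tempo))
```

A system would typically re-evaluate this every few seconds as new heart-rate samples arrive and glide between tempos rather than jump.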

Leading Multimodal AI Music Platforms

Commercial Solutions:

Mubert Studio Pro:

- Text-to-music generation with mood and genre controls

- Visual content analysis for automatic soundtrack creation

- Real-time adaptation based on user feedback

- Integration with video editing software

Soundraw Advanced:

- Image upload functionality for visual-to-audio conversion

- Natural language descriptions for complex musical requests

- Emotional state recognition through text analysis

- Multi-platform API for developer integration

AIVA Multimodal: